Google's 'Mind-Reading' AI

Imagine an AI that can read brain activity and work out which song a person is listening to. Google, working with Osaka University, has built a 'mind-reading' AI system that attempts exactly that.

The work builds on earlier research in which scientists 'reconstructed' other sounds from brain activity, ranging from human speech to birdsong and the neighing of horses. Recreating music from brain signals, however, had remained largely uncharted territory until now.

Dubbed 'Brain2Music', the AI pipeline takes brain imaging data and generates music that resembles short excerpts of the songs a person was listening to during the scan. The researchers described the work in a paper posted on July 20 to the preprint database arXiv; it has not yet been peer-reviewed.

How does this remarkable system work?

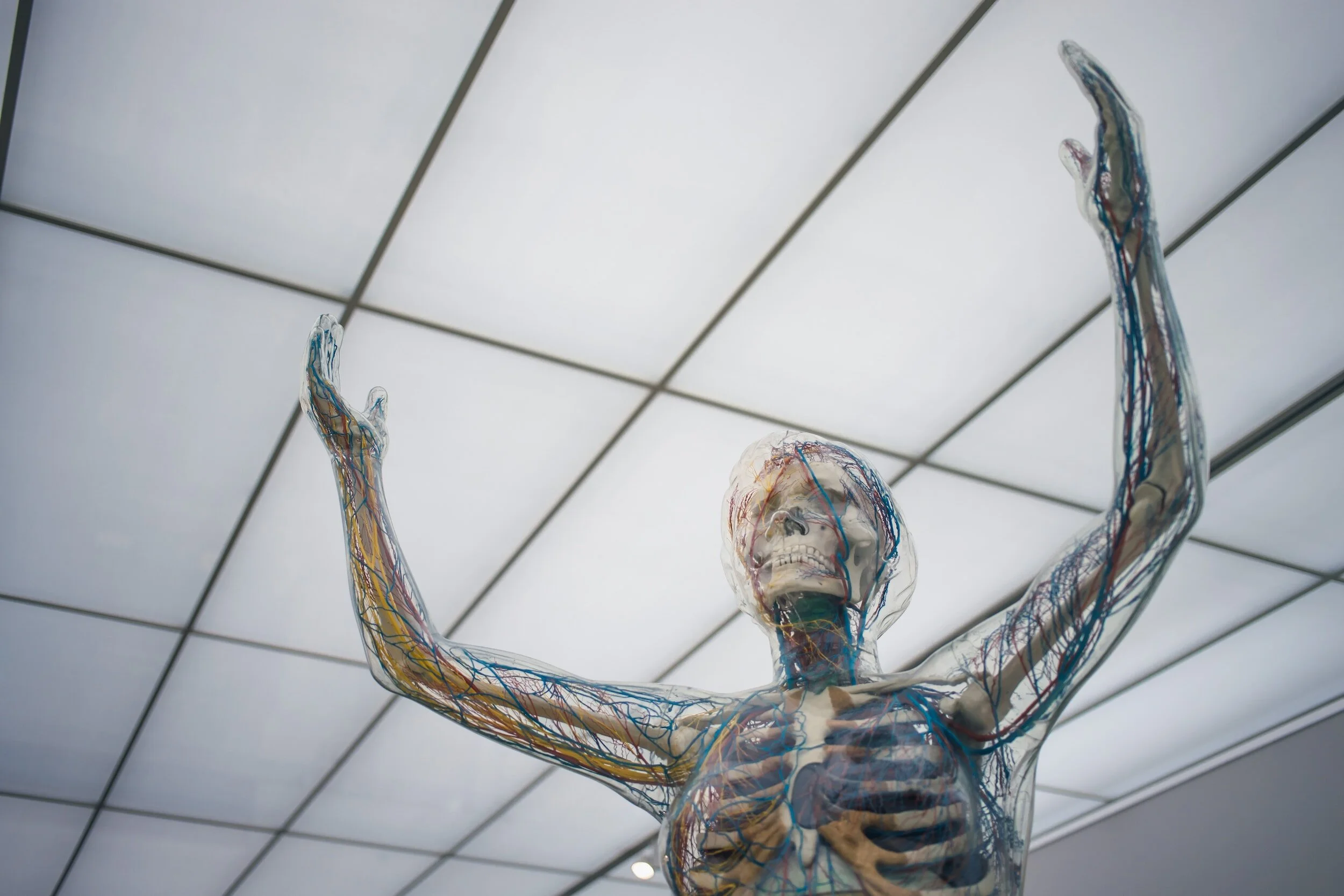

The process starts with functional magnetic resonance imaging (fMRI). This technique tracks the flow of oxygen-rich blood in the brain to show which regions are most active. The researchers collected fMRI data from five people as they listened to 15-second music clips from a range of genres.

The AI was then trained to link features of the music, from its mood to its rhythm, with each participant's brain signals. Each model was personalized: it was fitted to one individual's brain patterns and the music that person heard, rather than shared across participants.

Once trained, the AI converted the brain imaging data into a form that represented musical elements of the original song clips. The researchers then fed this information into MusicLM, another AI model developed by Google. MusicLM was originally designed to generate music from text descriptions; here it produced musical clips that resembled the original snippets, with varying degrees of accuracy.
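To make the "brain signals to musical features" step more concrete, here is a minimal sketch of one way such a per-person mapping could be fitted. It assumes a simple ridge (regularized linear) regression and uses random synthetic numbers in place of real fMRI data and music features. The article does not say which method the researchers used, so the function names, array sizes and `alpha` value below are all illustrative assumptions, and the final hand-off to a music generator such as MusicLM is not shown.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ Y)

def predict_features(X, W):
    """Map brain responses (clips x voxels) to music features (clips x dims)."""
    return X @ W

# Synthetic stand-in for ONE participant (all sizes are hypothetical).
rng = np.random.default_rng(0)
n_clips, n_voxels, n_dims = 200, 50, 8
hidden_W = rng.normal(size=(n_voxels, n_dims))             # unknown "true" mapping
X = rng.normal(size=(n_clips, n_voxels))                   # fake fMRI responses
Y = X @ hidden_W + 0.1 * rng.normal(size=(n_clips, n_dims))  # fake music features

# Fit on some clips, then predict music features for held-out clips.
X_train, X_test = X[:160], X[160:]
Y_train, Y_test = Y[:160], Y[160:]
W = fit_ridge(X_train, Y_train, alpha=1.0)
Y_pred = predict_features(X_test, W)

# Average correlation between predicted and actual features on held-out clips.
corr = float(np.mean([
    np.corrcoef(Y_pred[:, d], Y_test[:, d])[0, 1] for d in range(n_dims)
]))
print(f"mean held-out correlation: {corr:.3f}")
```

In this toy setup a separate `W` would be fitted for each participant, mirroring the personalized models described above. Real fMRI data is far noisier than this synthetic example, so the near-perfect fit here says nothing about how well the actual system performs.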

Timo Denk, a software engineer at Google in Switzerland, explained that the mood of the reconstructed music agreed with that of the original about 60% of the time, while the genre and instrumentation of the reconstructed and original music matched significantly more often than would be expected by chance.
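What "more often than would be expected by chance" means can be illustrated with a small permutation test. The sketch below is not the researchers' evaluation: the mood labels, the number of clips and the 60% agreement rate are made-up inputs chosen only to show the arithmetic, and the helper names are hypothetical.

```python
import random

def agreement(a, b):
    """Fraction of positions where two label lists match."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def permutation_p_value(original, reconstructed, n_perm=2000, seed=0):
    """Estimate how often shuffled labels agree at least as well as observed."""
    rng = random.Random(seed)
    observed = agreement(original, reconstructed)
    shuffled = list(reconstructed)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if agreement(original, shuffled) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return observed, (hits + 1) / (n_perm + 1)

# Hypothetical data: 40 clips with 4 invented mood labels.
original = ["happy", "sad", "calm", "tense"] * 10
reconstructed = list(original)
for i in range(16):
    # Deliberately mislabel 16 clips, leaving 24 of 40 (60%) in agreement.
    reconstructed[i] = original[(i + 1) % len(original)]

observed, p_value = permutation_p_value(original, reconstructed)
print(f"agreement: {observed:.2f}, permutation p-value: {p_value:.4f}")
```

With four equally common labels, random guessing would agree about 25% of the time, so 60% agreement sits well above chance in this invented example. The real chance level depends on how many categories the study used and how often each occurred, which the article does not state.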

But what's the broader objective of this groundbreaking endeavor?

Beyond showing what AI can do, the work is about understanding how the brain processes music. As co-author Yu Takagi, an expert in computational neuroscience and AI at Osaka University in Japan, explains, the primary auditory cortex is activated when we listen to music; this is where signals from the ears are interpreted as sounds. Another region, the lateral prefrontal cortex, appears to play an important role in processing the meaning of music, and it is also thought to be involved in tasks such as problem-solving and planning.

Interestingly, previous research has shown that activity in the prefrontal cortex changes when rappers improvise, which suggests potential avenues for future studies.

Google and Osaka University do not plan to stop here. They envision a future in which AI might reconstruct melodies that exist purely in our imagination, rather than only music we physically hear. As the boundaries between technology and neuroscience blur, we inch closer to understanding the relationship between the brain and music.